AI and the future of work

It is hard not to have noticed the flurry of activity surrounding the new generation of AI technologies over the last 12 months.

Whilst AI has been around in one form or another for decades, the last 12 months or so have seen massive gains in generative AI: systems that can create content from a simple prompt, be that a line of text, a seed image or the spoken word.

What does the future of the workplace with AI look like? No one knows for sure, but one thing is certain: it will be quite different from the workplace today. The question is, how different?

The latest Global CEO Survey from PricewaterhouseCoopers (PwC) asked CEOs from hundreds of companies for their thoughts on the future, and AI naturally formed part of that survey.

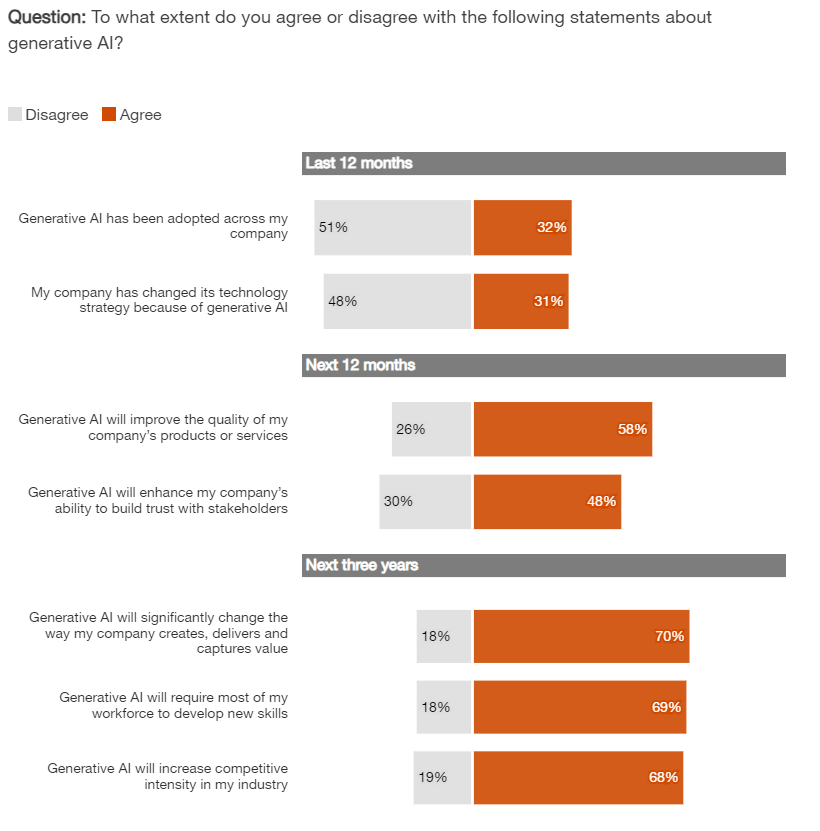

CEOs in the survey appear to believe in both the fast pace of generative AI adoption and its outsized potential for disruption. Over the next 12 months, about half of CEOs surveyed expect generative AI to enhance their ability to build trust with stakeholders, and about 60% expect it to improve product or service quality.

Within the next three years, nearly seven in ten respondents also anticipate generative AI will increase competition, drive changes to their business models and require new skills from their workforce.

CEOs who say they have adopted generative AI across their company are significantly more likely than others to anticipate its transformative potential over the next 12 months, as well as over the next three years.

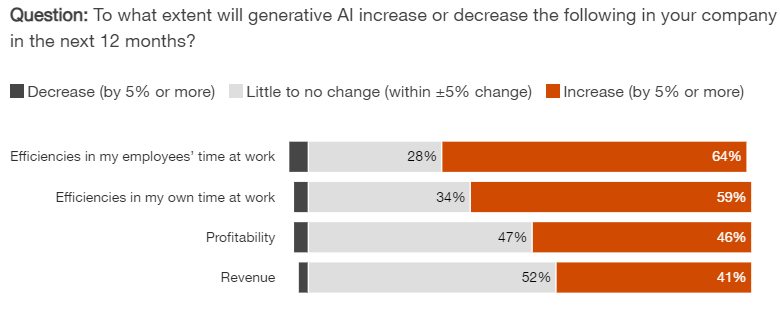

At a societal level, the effects of generative AI are still uncertain. Some of the efficiency benefits for companies do appear likely to come via employee headcount reduction—at least in the short term—with one-quarter of CEOs expecting to reduce headcount by at least 5% in 2024 due to generative AI.

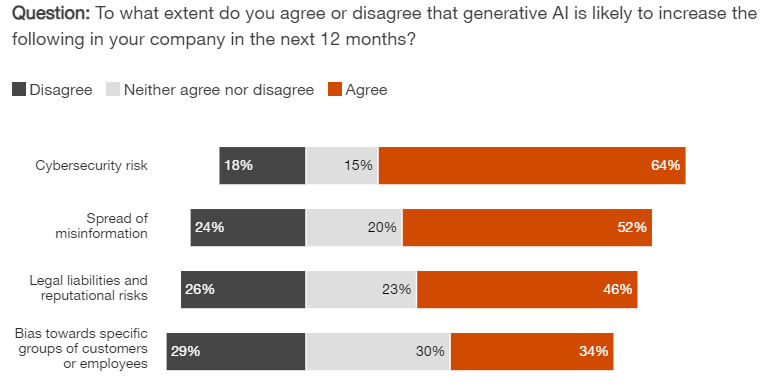

Even as the momentum of generative AI surges, a range of experts in the field are voicing concerns over the potentially significant, unintended consequences that could emerge as its reach grows. CEOs reflected similar sentiments in their responses to the survey.

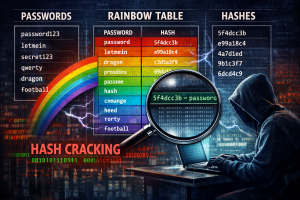

When it comes to generative AI, CEOs are most concerned about cyber security risk—and over half agree that it is likely to increase the spread of misinformation in their company. One-third of CEOs also expect generative AI to increase bias towards specific groups of employees or customers in the next 12 months.

Early days of AI

The earliest substantial work in the field of AI was conducted in the mid-20th century by Alan Turing. In 1935 Turing described an abstract computing machine consisting of a limitless memory and a scanner that moves back and forth through the memory, reading what it finds and writing further symbols.

The actions of the scanner are dictated by a program of instructions that is also stored in the memory in the form of symbols. This is known as Turing’s stored-program concept.
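The machine described above can be sketched in a few lines of Python. This is a simplified illustration rather than Turing's own formulation: the names `run_machine` and `FLIP_RULES` are invented here, the tape is a dictionary that grows on demand to stand in for limitless memory, and the rule table is held outside the tape for readability, whereas the stored-program idea places the instructions in the same memory as the data.

```python
from collections import defaultdict

# Hypothetical rule table: (state, symbol read) -> (symbol to write, move, next state).
# This example program flips every bit on the tape, then halts at the first blank.
FLIP_RULES = {
    ("start", "0"): ("1", "R", "start"),
    ("start", "1"): ("0", "R", "start"),
    ("start", "_"): ("_", "R", "halt"),
}

def run_machine(rules, tape, state="start", max_steps=1000):
    """Run a tiny tape machine until it halts or max_steps is reached."""
    # "_" marks a blank cell; unseen cells are created blank when first read.
    cells = defaultdict(lambda: "_", enumerate(tape))
    head = 0  # position of the scanner on the tape
    for _ in range(max_steps):
        if state == "halt":
            break
        symbol = cells[head]                        # read
        write, move, state = rules[(state, symbol)]
        cells[head] = write                         # write
        head += 1 if move == "R" else -1            # move right or left
    lo, hi = min(cells), max(cells)
    return "".join(cells[i] for i in range(lo, hi + 1)).strip("_")

print(run_machine(FLIP_RULES, "1011"))  # prints 0100
```

The scanner only ever reads one cell, writes one symbol and moves one step, yet the behaviour of the whole machine is determined entirely by the rule table it is given.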

In 1947, Turing gave what may have been the earliest public lecture to mention computer intelligence, saying, “What we want is a machine that can learn from experience,” and that the “possibility of letting the machine alter its own instructions provides the mechanism for this.”

In 1945 Turing predicted that computers would one day play very good chess, and in 1997, Deep Blue, a chess computer built by IBM, beat the reigning world champion, Garry Kasparov, in a six-game match.

Deep Blue wasn’t the first AI to be used to play a game. In 1951 Christopher Strachey wrote a checkers (draughts) program which ran on the Ferranti Mark I computer at the University of Manchester. By the summer of the following year the program could play a complete game of checkers at a reasonable speed.

The first AI program to run in the United States was also a checkers program. In 1952 Arthur Samuel wrote a program for the prototype of the IBM 701, and over a period of years considerably extended it.

In 1955 he added features that enabled the program to learn from experience.

Since those early pioneers, many others have followed, producing various systems including:

- 1955 – Logic Theorist – A system that went on to prove 38 of the first 52 theorems in chapter 2 of Principia Mathematica

- 1960 – 1962 – ADALINE & MADALINE – Two of the first neural network AI systems

- 1960 – 1963 – MINOS & MINOS II – more neural network AI systems that could recognise handwriting and symbols on maps

In the 1970s, AI was subject to critiques and financial setbacks.

AI researchers had failed to appreciate the difficulty of the problems they faced. Their optimism had raised public expectations impossibly high, and when the promised results failed to materialise, funding targeted at AI nearly disappeared. This has come to be known as the first AI winter, which lasted from 1974 to 1980.

The AI boom & the 2nd AI winter

In the 1980s a form of AI program called “expert systems” was adopted by corporations around the world and knowledge became the focus of mainstream AI research.

An expert system is a program that answers questions or solves problems about a specific domain of knowledge, using logical rules that are derived from the knowledge of experts.

Because expert systems restricted themselves to a small domain of specific knowledge, and because their simple design made it relatively easy for programs to be built and then modified, the programs proved to be useful: something that AI had not been able to achieve up to this point.
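As a rough illustration of the idea, an expert system can be sketched as a list of if-then rules plus a loop that keeps applying them until nothing new can be concluded (a technique usually called forward chaining). The rules and fact names below are invented for this example and are not taken from any real system; production expert systems held thousands of rules written with domain experts.

```python
# Each rule pairs a set of conditions with the fact to conclude when all hold.
# These rules are hypothetical, loosely themed around configuring a computer.
RULES = [
    ({"needs_graphics", "desktop_case"}, "add_graphics_card"),
    ({"add_graphics_card"}, "add_larger_power_supply"),
    ({"laptop_case"}, "add_battery"),
]

def infer(facts, rules):
    """Forward chaining: fire rules until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when every one of its conditions is already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known

order = infer({"needs_graphics", "desktop_case"}, RULES)
print(sorted(order))
```

Changing the system's behaviour means editing the rule list rather than the inference loop, which is the property that made such programs comparatively easy to build and modify.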

In 1980, an expert system called XCON was completed at Carnegie Mellon University for DEC (Digital Equipment Corporation). By 1986 it was saving the company 40 million dollars annually.

On the back of this enormous success, corporations around the world began to develop and deploy expert systems, and by 1985 they were spending over a billion dollars on AI, most of it on in-house AI departments.

However, this boom was fairly short-lived. Many companies failed during this period, and the finger of suspicion started to point at the AI systems those companies had heavily invested in; the perception was that the technology was not viable. And so we entered the second AI winter, which lasted from 1987 to 1993.

AI successes and the second AI boom

By the early 1990s, compute power had improved greatly, and with it the capabilities of AI systems. In 1997 AI got a huge popularity boost from the chess match in which Garry Kasparov was beaten by IBM’s Deep Blue system. It is reported that Deep Blue was evaluating 200 million positions per second during the match.

In 2005, a Stanford robot won the DARPA Grand Challenge by driving autonomously for 131 miles along an unrehearsed desert trail, and just two years later, a team from Carnegie Mellon won the DARPA Urban Challenge by autonomously navigating 55 miles in an urban environment while responding to traffic hazards and adhering to all traffic laws.

In 2011, in a Jeopardy! quiz show match, IBM’s Watson AI defeated the two greatest Jeopardy! champions by a significant margin.

By 2016, the global market for AI-related products had exceeded 8 billion USD, driven mainly by advances in Big Data processing and advanced machine learning techniques.

Advances in deep learning (particularly deep convolutional neural networks and recurrent neural networks) have driven progress greatly in the last 10 years, and research in image and video processing, text analysis, and speech recognition has led to the creation of the new breed of AI: generative AI.

AI models such as GPT-3 (Generative Pre-trained Transformer), released by OpenAI in 2020, and Gato, released by DeepMind in 2022, have been described as important achievements of machine learning.

GPT-4 has since become the breakthrough AI product of recent times. Launched on March 14, 2023, GPT-4 was initially made publicly available via the paid chatbot product ChatGPT Plus, and via OpenAI’s API.

Unlike its predecessors, GPT-4 is a multimodal model, which means it can take images as well as text as input. This gives it the ability to describe the humor in a cartoon, identify the subject of a photo, summarise text from screenshots, or even answer exam questions that contain diagrams.

When instructed to do so, GPT-4 can interact with external interfaces. For example, the model could be instructed to enclose a query within <search></search> tags to perform a web search, the result of which would be inserted into the model’s prompt to allow it to form a response. GPT-4 can also be useful for assisting in programming tasks, such as finding errors in existing code and suggesting optimisations to improve performance.
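The search-tag pattern described above can be sketched as follows. This is an illustration of the general idea only, not OpenAI's API: `extract_query`, `answer_with_search`, `fake_model` and `fake_search` are hypothetical names, and the model and search engine are replaced by stand-in functions so the example runs on its own.

```python
import re

# Matches the text between a <search> tag and its closing </search> tag.
SEARCH_TAG = re.compile(r"<search>(.*?)</search>", re.DOTALL)

def extract_query(model_output):
    """Return the text inside the first <search></search> pair, or None."""
    match = SEARCH_TAG.search(model_output)
    return match.group(1).strip() if match else None

def answer_with_search(prompt, model, search):
    """One round of tool use: the model asks, we search, the model answers."""
    first_reply = model(prompt)
    query = extract_query(first_reply)
    if query is None:
        return first_reply  # the model answered without searching
    result = search(query)
    # Insert the search result into the prompt and ask the model again.
    followup = f"{prompt}\n\nSearch results for '{query}':\n{result}"
    return model(followup)

# Stand-ins for a real model and a real search engine (assumptions for the demo).
def fake_model(prompt):
    if "Search results" in prompt:
        return "Based on the search results: Deep Blue won in 1997."
    return "<search>Deep Blue Kasparov year</search>"

def fake_search(query):
    return "Deep Blue beat Garry Kasparov in 1997."

print(answer_with_search("When did Deep Blue beat Kasparov?", fake_model, fake_search))
```

The model never touches the web itself; the surrounding program watches its output for the agreed tags, performs the search, and feeds the result back in as extra prompt text.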

Passing exams

GPT-4 has demonstrated aptitude on several standardised tests.

OpenAI claims that in its own testing the model received a score of 1410 on the SAT, 163 on the LSAT, and 298 on the Uniform Bar Exam. GPT-4 has also passed an oncology exam, an engineering exam, and a plastic surgery exam.

ChatGPT

ChatGPT was launched by OpenAI on November 30th 2022. It was initially free to the public, with the company planning to monetize the service later. By December 4, 2022, ChatGPT had over one million users, and by January 2023 it had reached over 100 million, making it the fastest-growing consumer application up to that point.

GPT-4 was released on March 14th 2023 and was made available via an API and to premium ChatGPT users.

In September 2023, OpenAI announced that ChatGPT “can now see, hear, and speak”. ChatGPT Plus users can upload images to the AI, while mobile app users can talk to the system.

In January 2024, OpenAI launched the GPT Store, a marketplace for custom chatbots derived from ChatGPT. At launch, the GPT Store offered more than 3 million custom chatbots, a figure which is rising daily.

3rd AI boom

Are we in the middle of a 3rd AI boom? Is there another AI winter on the horizon?

Who knows. One thing is certain: AI is transforming many sectors and is set to continue doing so for the foreseeable future. How that future shapes up is yet to be seen.

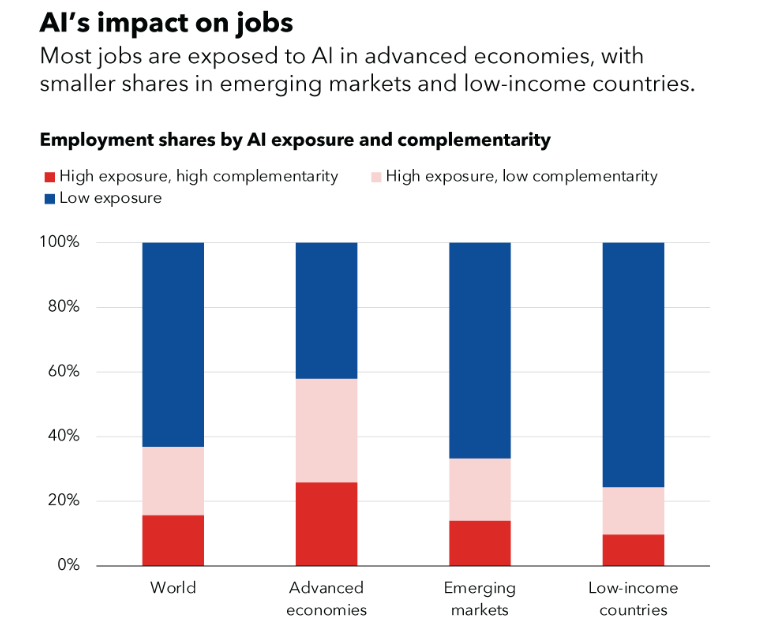

The recent report by the International Monetary Fund (IMF) estimates that almost 40% of jobs globally are exposed to advances in AI. Whether that turns out to be good or bad comes down to decision makers.

In most scenarios, AI will likely worsen overall inequality, a troubling trend that policymakers must proactively address to prevent the technology from further stoking social tensions. It is crucial for countries to establish comprehensive social safety nets and offer retraining programs for vulnerable workers. In doing so, we can make the AI transition more inclusive, protecting livelihoods and curbing inequality.

IMF blog – AI will transform the world

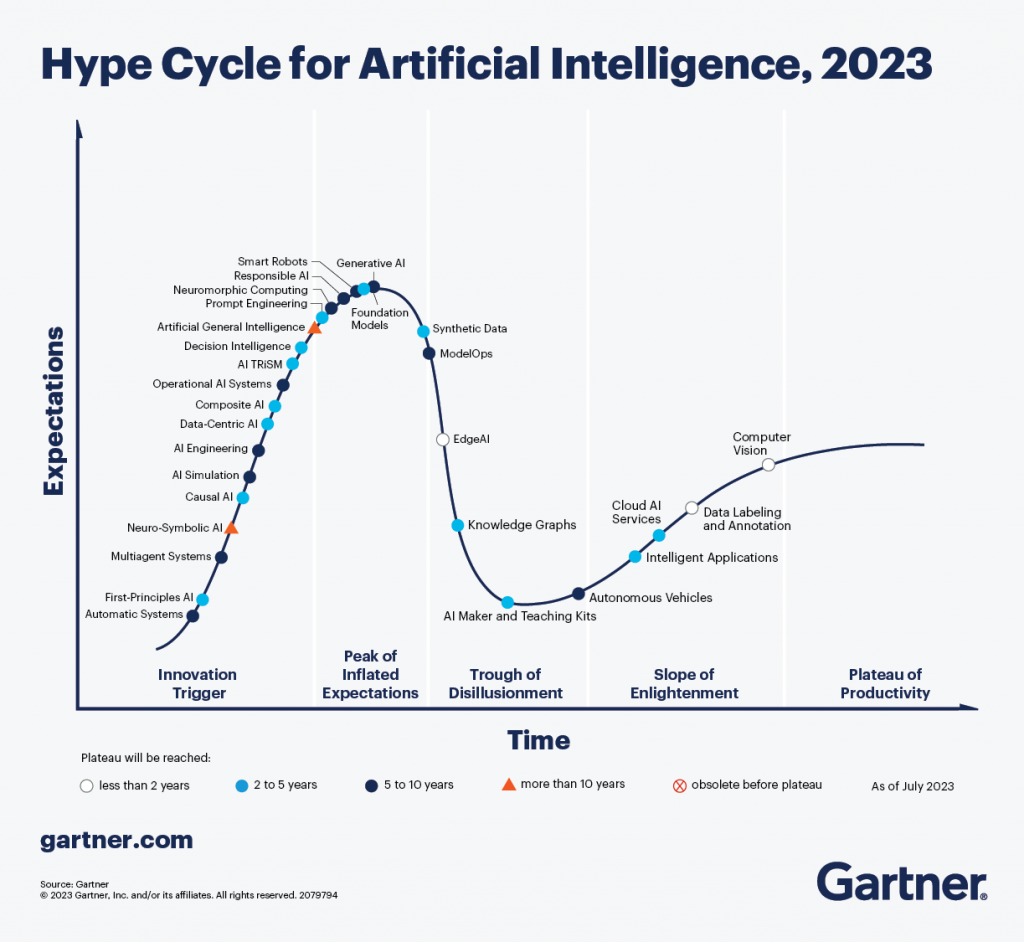

The Gartner 2023 AI hype cycle puts generative AI at the peak of inflated expectations at the moment. As with all things, it will eventually slide down into the trough of disillusionment, once the novelty of generating a 2000 word essay or a funny image from a prompt wears off. Eventually it will become a mainstream tool, used by some and ignored by others. On that road, though, there are some tough decisions to be made, both from a technical perspective and from a moral and ethical one.