(22/09/23) Blog 265 – Microsoft researchers accidentally expose 38TB of data

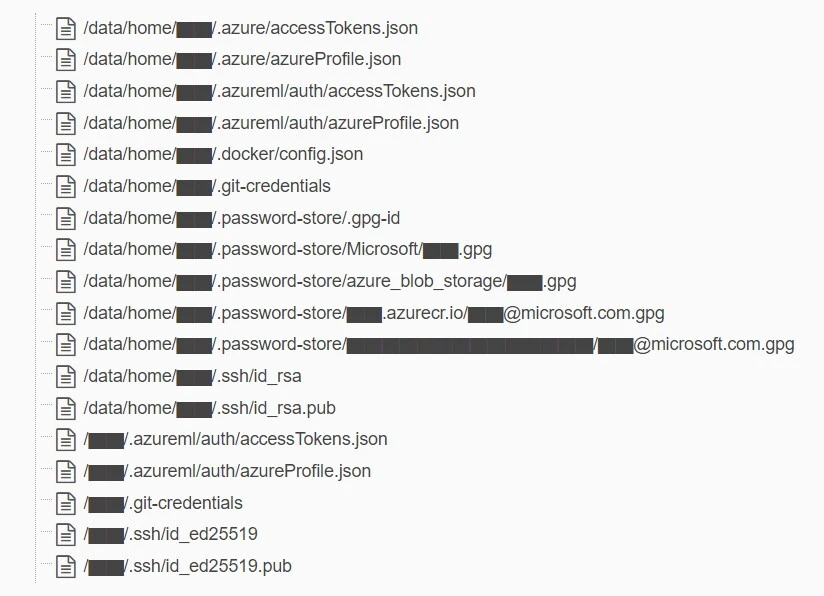

While publishing open-source training data on GitHub, a team of researchers from Microsoft’s AI division accidentally exposed 38 terabytes of additional private data, including a disk backup of two employees’ workstations.

The backup includes secrets, private keys, passwords, and over 30,000 internal Microsoft Teams messages.

The researchers shared their files using an Azure feature called SAS tokens, which allows you to share data from Azure Storage accounts. The access level can be limited to specific files; in this case, however, the link was configured to share the entire storage account, including the 38TB of private data.

This incident is a good example of the new risks organisations face when they start to leverage the power of AI. As more software engineers work with massive amounts of training data, that data needs additional security checks and safeguards.

The accidental data exposure was discovered by researchers at Wiz.io, a cloud security company dedicated to helping organisations create secure cloud environments that accelerate their businesses.

The team had been scouring the Internet for insecure data repositories when they stumbled across the GitHub repository being used by Microsoft’s AI research division.

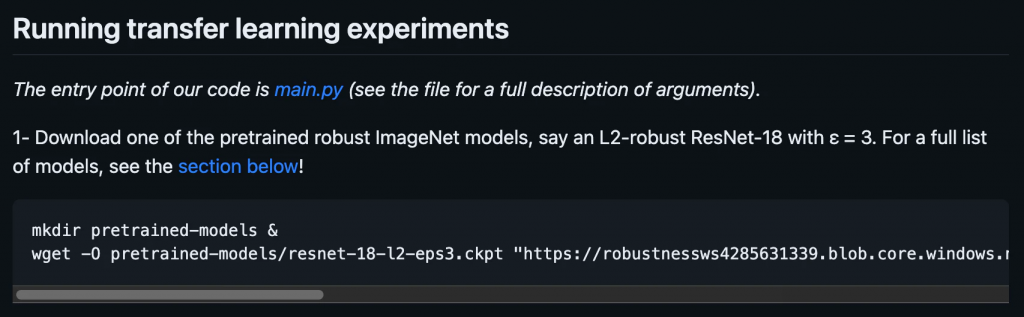

The GitHub repository provided open-source code and AI models for image recognition, and its users were instructed to download the models from an Azure Storage URL.

The URL, however, allowed access to more than just the open-source models. It was misconfigured to grant permissions on the entire storage account, exposing the additional private data by mistake.

The researchers at Wiz.io also found other sensitive data in the exposed account, including the more than 30,000 internal Microsoft Teams messages.

The Wiz.io team discovered another blunder: the token did not just expose the data, it was also configured with “Full control” permissions rather than “read-only”. This meant a malicious actor could have taken over the data and deleted or edited the contents of the folders.

Insecure SAS Token

The access to the data was made possible via an insecure Shared Access Signature (SAS) Token.

In Microsoft Azure, an SAS token is a signed URL that grants access to Azure Storage data.

The user can customise the access level the token grants, with permissions ranging from read-only to full control.

The scope of the permissions can be a single file, a container, or an entire storage account. The expiry time is also completely customisable, which lets the user create tokens that, in practice, never expire.

This flexible approach to access control provides great agility for users, but it also creates the risk of granting too much access; in the most permissive case, the token can allow full control permissions on the entire account, forever.

That is exactly what happened here: someone created a token with the highest possible level of access, effectively opening up the entire storage account to anyone who had the token, which was included in the URL shared on GitHub.
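To make those risky settings concrete, here is a minimal Python sketch that inspects a SAS URL’s query string and flags the three problems described above: account-wide scope, permissions beyond read/list, and a long expiry. It relies on Azure’s documented SAS query parameters (`srt` appears only on account SAS tokens, `sp` holds the permission letters, `se` holds the expiry time). The function name `audit_sas_url`, the 30-day threshold, the example URLs and the choice to treat every permission other than `r` and `l` as risky are my own assumptions for illustration; this is not a Microsoft or Wiz tool.

```python
from datetime import datetime, timedelta, timezone
from typing import List, Optional
from urllib.parse import parse_qs, urlparse

# Assumption: anything beyond read (r) and list (l) is treated as risky.
READ_ONLY_PERMISSIONS = set("rl")


def audit_sas_url(url: str, max_lifetime_days: int = 30,
                  now: Optional[datetime] = None) -> List[str]:
    """Return human-readable warnings about an Azure Storage SAS URL (hypothetical helper)."""
    now = now or datetime.now(timezone.utc)
    # parse_qs also URL-decodes values such as '%3A' in the expiry time.
    params = {key: values[0] for key, values in parse_qs(urlparse(url).query).items()}
    warnings = []

    # Account SAS tokens carry 'srt' (signed resource types); service SAS
    # tokens carry 'sr' instead and are limited to one container or blob.
    if "srt" in params:
        warnings.append("account SAS: scope is the entire storage account")

    # 'sp' lists the granted permissions as single letters, e.g. 'racwdl'.
    extra = sorted(set(params.get("sp", "")) - READ_ONLY_PERMISSIONS)
    if extra:
        warnings.append("grants more than read/list: " + "".join(extra))

    # 'se' is the signed expiry time in ISO 8601 (UTC) form.
    expiry = params.get("se")
    if expiry is None:
        warnings.append("no expiry (se) parameter found")
    else:
        expires_at = datetime.fromisoformat(expiry.replace("Z", "+00:00"))
        if expires_at.tzinfo is None:  # date-only values carry no time zone
            expires_at = expires_at.replace(tzinfo=timezone.utc)
        if expires_at - now > timedelta(days=max_lifetime_days):
            warnings.append(f"expires {expires_at.date()}, more than "
                            f"{max_lifetime_days} days away")
    return warnings


# Hypothetical over-permissive token: whole account, read/write/delete, valid until 2051.
risky_url = ("https://example.blob.core.windows.net/?sv=2020-08-04&ss=b&srt=sco"
             "&sp=rwdlac&se=2051-01-01T00:00:00Z&spr=https&sig=REDACTED")
for warning in audit_sas_url(risky_url):
    print(warning)
```

A token scoped to a single blob (`sr=b`), with `sp=r` and an expiry a few days out, produces no warnings. That is roughly the shape of link the researchers needed to share their models.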

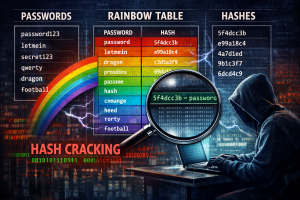

I think the image above really sums it all up…