(09/08/23) Blog 221 – Acoustic side channel attack on keyboards

A paper has been released by three university researchers that examines side-channel attacks which use acoustics to recover data typed on keyboards.

Joshua Harrison, Ehsan Toreini, and Maryam Mehrnezhad are researchers from Durham University, the University of Surrey, and Royal Holloway, University of London, respectively. They published their paper on August 3rd 2023.

Highly accurate

Acoustic side-channel attacks are not a new concept and have been studied for many years. What made the research in this paper novel was that the acoustically collected data was used to train an AI model that could then identify keystrokes in new recordings with a high degree of accuracy.

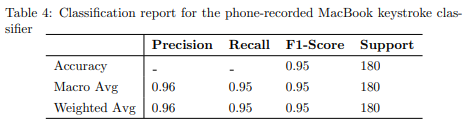

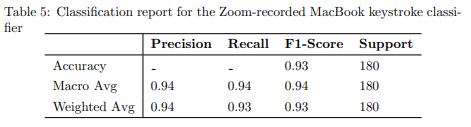

For the paper, the researchers recorded keystrokes with a mobile phone microphone and achieved 96% accuracy with their deep-learning model. In a second test, they recorded keystrokes over a Zoom conference call and achieved 93% accuracy.

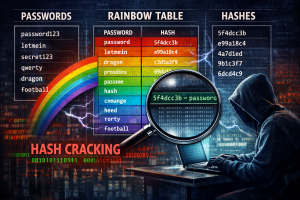

This level of accuracy poses a risk to anyone typing sensitive data, such as passwords or payment information, while a mobile phone is nearby or while in a virtual meeting.

Training data

In both sets of experiments (via phone and Zoom), 36 of the laptop's keys were used (0-9, a-z). Each key was pressed 25 times in a row, varying the pressure and the finger used, and a single file containing all 25 presses was generated for each key.
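As a rough illustration of the dataset's size, the sketch below enumerates the labelled samples this setup yields: 36 keys times 25 presses gives 900 keystrokes per recording method. The names `KEYS`, `CLASS_INDEX`, `PRESSES_PER_KEY` and `build_labels` are hypothetical, and labelling each keystroke with the key of the file it was cut from is an assumption about how labels were assigned, not code from the paper.

```python
import string

# Hypothetical constants mirroring the setup described above:
# the digits 0-9 plus the letters a-z, each pressed 25 times.
KEYS = list(string.digits + string.ascii_lowercase)
PRESSES_PER_KEY = 25

# Map each key to an integer class index, as a classifier would expect.
CLASS_INDEX = {key: i for i, key in enumerate(KEYS)}


def build_labels(keys=KEYS, presses=PRESSES_PER_KEY):
    """Return one (key, class_index, press_number) tuple per keystroke.

    Assumes every keystroke cut from a key's recording file inherits
    that key as its label.
    """
    return [(key, CLASS_INDEX[key], press)
            for key in keys
            for press in range(presses)]


labels = build_labels()
print(len(KEYS), len(labels))  # 36 900
```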

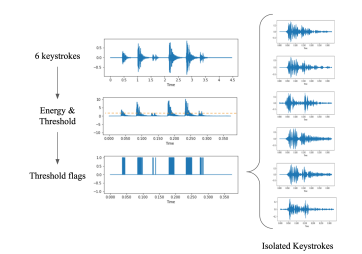

Once all presses were recorded, a function was implemented to extract individual keystrokes. A fast Fourier transform was applied to the recording, and the coefficients were summed across frequencies to obtain the signal's ‘energy’.

An energy threshold was then defined and used to signal the presence of a keystroke.
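The keystroke-isolation step can be sketched roughly as follows. This is a minimal illustration, not the authors' function: the frame length (256 samples), the use of coefficient magnitudes as the 'energy', the threshold value and the helper names `fft`, `frame_energy` and `detect_keystrokes` are all assumptions. It splits the audio into frames, runs a radix-2 fast Fourier transform on each, sums the magnitudes across frequencies and reports where that energy first rises above the threshold.

```python
import cmath
import math


def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def frame_energy(audio, frame_len=256):
    """Assumed 'energy' per non-overlapping frame: FFT magnitudes summed over frequency."""
    energies = []
    for start in range(0, len(audio) - frame_len + 1, frame_len):
        coeffs = fft(audio[start:start + frame_len])
        energies.append(sum(abs(c) for c in coeffs))
    return energies


def detect_keystrokes(audio, threshold, frame_len=256):
    """Sample offsets of frames where energy first crosses the (hypothetical) threshold."""
    onsets = []
    above = False
    for i, energy in enumerate(frame_energy(audio, frame_len)):
        if energy > threshold and not above:
            onsets.append(i * frame_len)  # start of a new keystroke
        above = energy > threshold
    return onsets


# Synthetic test: silence with two short tone bursts standing in for keystrokes.
audio = [0.0] * 4096
for burst_start in (512, 2560):
    for n in range(256):
        audio[burst_start + n] = math.sin(2 * math.pi * 8 * n / 256)

print(detect_keystrokes(audio, threshold=10.0))  # [512, 2560]
```

Tracking whether the previous frame was already above the threshold stops a single keystroke that spans several frames from being counted more than once.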

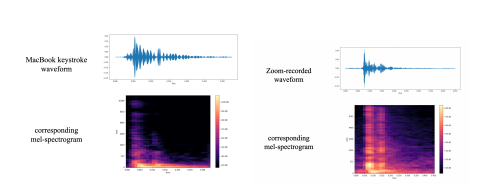

The waveforms were also converted to spectrograms.
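A spectrogram is a sequence of short-time Fourier transforms: the signal is cut into overlapping windows, and each window becomes one row (time step) of frequency magnitudes. The sketch below computes a plain linear-frequency magnitude spectrogram with a Hann window; the window length, hop size and the names `hann` and `spectrogram` are illustrative assumptions and do not reproduce the paper's exact spectrogram settings. A direct DFT is used for readability rather than speed.

```python
import cmath
import math


def hann(n):
    """Periodic Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def spectrogram(audio, frame_len=64, hop=32):
    """Magnitude spectrogram: one row per frame, frame_len // 2 + 1 frequency bins per row."""
    window = hann(frame_len)
    rows = []
    for start in range(0, len(audio) - frame_len + 1, hop):
        # Apply the window to reduce spectral leakage at the frame edges.
        frame = [s * w for s, w in zip(audio[start:start + frame_len], window)]
        row = []
        for k in range(frame_len // 2 + 1):  # non-negative frequencies only
            coeff = sum(x * cmath.exp(-2j * math.pi * k * n / frame_len)
                        for n, x in enumerate(frame))
            row.append(abs(coeff))
        rows.append(row)
    return rows


# A pure tone completing 8 cycles per 64-sample frame peaks in bin 8.
tone = [math.sin(2 * math.pi * 8 * n / 64) for n in range(256)]
spec = spectrogram(tone)
print(len(spec), len(spec[0]))  # 7 33
print(max(range(len(spec[0])), key=lambda k: spec[0][k]))  # 8
```

The resulting 2-D grid of magnitudes is what lets an image classifier treat each keystroke as a picture.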

Experiments

The data produced from the keystroke recordings, along with the resulting waveforms and spectrograms, was used to train a deep-learning model.

The model used in the experiment was CoAtNet, a family of hybrid convolution and attention models developed by researchers at Google. CoAtNet had already proven highly accurate at image classification, achieving 90.88% top-1 accuracy on the ImageNet benchmark (1,000 classes) in 2021.

The researchers implemented CoAtNet using PyTorch, a Python deep-learning framework.

The “victim” in the experiments was using a 16-inch Apple MacBook Pro with 16 GB of RAM and an Apple M1 Pro processor. This model has the same keyboard design used on most Apple devices produced in the last few years.

The mobile phone used for acoustic capture was an iPhone 13 mini, placed on a microfibre cloth 17 cm away from the MacBook Pro. Recordings were made in stereo at a sample rate of 44,100 Hz with 32 bits per sample.

When using Zoom, keystrokes were recorded with the built-in recording function of the video-conferencing application. The Zoom meeting had a single participant, who was using the MacBook's built-in microphone array.

Results

Data recorded via the mobile phone produced an accuracy of 96%, while the Zoom data produced an accuracy of 93%.

The researchers produced a third set of results using Skype, under the same conditions as the Zoom experiment. That experiment achieved 91.5% accuracy.