(17-01-23) Blog 17 – Heimdall WiFi Radar

A post popped up on my Twitter feed the other day which sparked my interest, so I thought I’d look a bit more into it.

Twitter user Galileo (@G4lile0) posted that they have developed a system which tracks people through walls using WiFi devices.

In their tweets, @G4lile0 explains that they used several reasonably cheap, WiFi-capable ESP8266 microchips as transceivers, and a technique called trilateration to combine the signals received by each of them.

By knowing the locations of the transceivers and the signal RSSI (Received Signal Strength Indicator) they were able to approximate the position of the emitter to feed into an AI system.
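
To make the idea concrete, here is a minimal Python sketch of RSSI-based trilateration. It is not taken from @G4lile0's code: the function names, the log-distance path-loss model, and the default calibration values (an RSSI of -40 dBm at 1 m and a path-loss exponent of 2) are all assumptions chosen purely for illustration.

```python
import math


def rssi_to_distance(rssi_dbm, rssi_at_1m=-40.0, path_loss_exponent=2.0):
    """Estimate range in metres using the log-distance path-loss model.

    rssi_at_1m and path_loss_exponent are illustrative assumptions; real
    values have to be calibrated for the hardware and the room.
    """
    return 10 ** ((rssi_at_1m - rssi_dbm) / (10.0 * path_loss_exponent))


def trilaterate(anchors, distances):
    """Least-squares 2D position from three or more (x, y) anchors and ranges."""
    (x0, y0), d0 = anchors[0], distances[0]
    # Subtracting the first circle equation from each of the others removes
    # the x^2 and y^2 terms, leaving linear rows of the form a*x + b*y = c.
    rows = []
    for (xi, yi), di in zip(anchors[1:], distances[1:]):
        a = 2.0 * (xi - x0)
        b = 2.0 * (yi - y0)
        c = d0**2 - di**2 + xi**2 - x0**2 + yi**2 - y0**2
        rows.append((a, b, c))
    # Solve the 2x2 normal equations (A^T A) p = A^T c in closed form.
    saa = sum(a * a for a, _, _ in rows)
    sab = sum(a * b for a, b, _ in rows)
    sbb = sum(b * b for _, b, _ in rows)
    sac = sum(a * c for a, _, c in rows)
    sbc = sum(b * c for _, b, c in rows)
    det = saa * sbb - sab * sab
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear; position is not unique")
    return (sac * sbb - sab * sbc) / det, (saa * sbc - sab * sac) / det


# Four hypothetical receivers in the corners of a 4 m x 4 m room.
anchors = [(0.0, 0.0), (4.0, 0.0), (0.0, 4.0), (4.0, 4.0)]
emitter = (1.0, 2.0)
ranges = [math.dist(emitter, a) for a in anchors]
print(trilaterate(anchors, ranges))  # close to (1.0, 2.0)
```

In practice RSSI is noisy indoors, so the ranges fed into `trilaterate` would be rough estimates from `rssi_to_distance` rather than the exact distances used in this toy example.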

This allows them to generate imagery of people in a room from the WiFi signals.

In their tweets, @G4lile0 stresses that they did not invent this technology, but based it on work already conducted by others. They go on to say that they just wrote the code so that others can run it themselves.

@G4lile0 has released all their source code for the project on GitHub for anyone interested in building their own system.

Standing on the shoulders of giants

@G4lile0 has built a working system based on the work detailed in a paper titled “DensePose from WiFi” by Jiaqi Geng, Dong Huang, and Fernando De La Torre at Carnegie Mellon University.

The authors of the paper state:

“Advances in computer vision and machine learning techniques have led to significant development in 2D and 3D human pose estimation from RGB cameras, LiDAR, and radars. However, human pose estimation from images is adversely affected by occlusion and lighting, which are common in many scenarios of interest.

Radar and LiDAR technologies, on the other hand, need specialized hardware that is expensive and power-intensive. Furthermore, placing these sensors in non-public areas raises significant privacy concerns.

To address these limitations, recent research has explored the use of WiFi antennas (1D sensors) for body segmentation and key-point body detection.

This paper further expands on the use of the WiFi signal in combination with deep learning architectures, commonly used in computer vision, to estimate dense human pose correspondence.

We developed a deep neural network that maps the phase and amplitude of WiFi signals to UV coordinates within 24 human regions.

The results of the study reveal that our model can estimate the dense pose of multiple subjects, with comparable performance to image-based approaches, by utilizing WiFi signals as the only input. This paves the way for low-cost, broadly accessible, and privacy-preserving algorithms for human sensing.”

DensePose From Wifi

In the paper, the authors state that identifying the human form using RF technologies is not new, and that their work builds on previous studies which utilise radar and LiDAR.

They explain that one existing system was able to estimate the human form in an indoor setting to a resolution of 8.8 cm, but required specialised hardware and operated on non-standard frequencies. The paper’s authors wanted to utilise the existing IEEE 802.11n/ac standards which current WiFi systems conform to.

Additionally, the authors of the paper state that existing systems are only able to detect and classify single entities. They were seeking to be able to identify multiple entities with their system.

“Recently, Fei Wang et.al. demonstrated that it is possible to detect 17 2D body joints and perform 2D semantic body segmentation mask using only WiFi signals. In this work, we go beyond by estimating dense body pose, with much more accuracy than the 0.5m that the WiFi signal can provide theoretically. Our dense posture outputs push above WiFi’s signal constraint in body localization, paving the road for complete dense 2D and possibly 3D human body perception through WiFi.”

The CSI (Channel State Information) they receive from the WiFi transmitters is processed to separate out the amplitude and phase data, which are then cleaned of any signal noise.
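
As a rough illustration of that step, the Python sketch below splits complex CSI samples into amplitude and phase, unwraps the phase, and subtracts a fitted straight line across subcarriers. This is one common way of sanitising CSI phase and is an assumption on my part, not necessarily the authors' exact pipeline; the function names and the synthetic input are hypothetical.

```python
import cmath
import math


def split_csi(csi):
    """Split complex CSI samples (one per subcarrier) into amplitude and phase."""
    amplitude = [abs(h) for h in csi]
    phase = [cmath.phase(h) for h in csi]  # each value lies in (-pi, pi]
    return amplitude, phase


def unwrap(phase):
    """Remove the 2*pi jumps between neighbouring subcarriers."""
    out = [phase[0]]
    for p in phase[1:]:
        delta = p - out[-1]
        # Bring the step back into [-pi, pi] by removing whole turns.
        delta -= 2.0 * math.pi * round(delta / (2.0 * math.pi))
        out.append(out[-1] + delta)
    return out


def remove_linear_trend(phase):
    """Subtract the least-squares line across subcarrier index.

    A linear phase slope is typically caused by timing offsets rather than
    by the environment, so only the residual is kept.
    """
    n = len(phase)
    mean_i = (n - 1) / 2.0
    mean_p = sum(phase) / n
    var_i = sum((i - mean_i) ** 2 for i in range(n))
    slope = sum((i - mean_i) * (p - mean_p) for i, p in enumerate(phase)) / var_i
    return [p - mean_p - slope * (i - mean_i) for i, p in enumerate(phase)]


# Synthetic CSI: constant amplitude 2 with a purely linear phase ramp.
csi = [cmath.rect(2.0, 0.9 * k + 0.3) for k in range(8)]
amplitude, raw_phase = split_csi(csi)
clean_phase = remove_linear_trend(unwrap(raw_phase))
print(amplitude)
print(clean_phase)  # residuals are essentially zero for this synthetic input
```

With real measurements the residual phase would not be zero; it is that leftover variation, along with the amplitude, that carries information about what the signal passed through.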

The cleaned data is passed through a series of encoder-decoders to build a 2D map of the environment, which is then fed into their AI system (WiFi-DensePose RCNN) to build up the human images.

DensePose From WiFi – Jiaqi Geng, Dong Huang, Fernando De La Torre


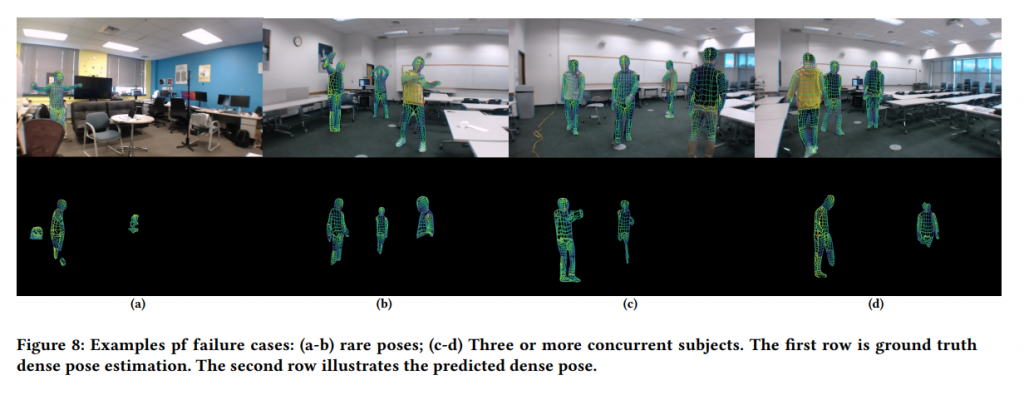

In the paper, the authors share imagery from the system they built which clearly shows the form of people in a room.

The initial tests produced a number of errors whereby the system did not detect people correctly, and in some cases incorrectly identified objects as humans.


With numerous rounds of training, however, the AI system became much more accurate, as the image below shows:

Fascinating stuff, I’m sure you will agree!